Diane Ravitch's Blog: David Berliner and Gene Glass: Why Bother Testing in 2021?

David Berliner and Gene Glass are leaders of the American education research community. Their books are required reading in the field. They shared with me their thoughts about the value of annual testing in 2021. I would add only one point: if Trump is voted out in November, Jim Blew and Betsy DeVos will have no role in deciding whether to demand or require the annual standardized testing regime in the spring of 2021. New people who are, hopefully, wiser and more attuned to the failure of standardized testing over 20 years, will take their place.

Glass and Berliner write:

Why Bother Testing in 2021?

Gene V Glass

David C. Berliner

At a recent Education Writers Association seminar, Jim Blew, an assistant to Betsy DeVos at the Department of Education, opined that the Department is inclined not to grant waivers to states seeking exemptions from the federally mandated annual standardized achievement testing. States like Michigan, Georgia, and South Carolina were seeking a one-year moratorium. Blew insisted that “even during a pandemic [tests] serve as an important tool in our education system.” He said that the Department’s “instinct” was to grant no waivers. Which system he was referring to, and to whom the tests are important, are the two questions we seek to unravel here.

Without question, the “system” of the U.S. Department of Education has a huge stake in enforcing annual achievement testing. It’s not just that the Department’s relationship with Pearson Education is at stake, Pearson being the U.K. corporation that is the major contractor for state testing, with annual revenues of nearly $5 billion. The Department’s image as a “get tough” defender of high standards is also at stake. Pandemic be damned! We can’t let those weak-kneed blue states get away with covering up the incompetence of those teacher unions.

To whom are the results of these annual testings important? Governors? District superintendents? Teachers?

How the governors feel about the test results depends entirely on where they stand on the political spectrum. Blue state governors praise the findings when they are above the national average, and they call for increased funding when they are below. Red state governors, whose state’s scores are generally below average, insist that the results are a clear call for vouchers and more charter schools – in a word, choice. District administrators and teachers live in fear that they will be blamed for bad scores; and they will.

Fortunately, all the drama and politicking about the annual testing is utterly unnecessary. Last year’s district or even schoolhouse average almost perfectly predicts this year’s average. Give us the average Reading score for Grade Three for any medium-size or larger district for the last year and we’ll give you the average for this year within a point or two. So at the very least, testing every year is a waste of time and money – money that might ultimately help cover the salary of executives like John Fallon, Pearson Education CEO, whose total compensation in 2017 was more than $4 million.

But we wouldn’t even need to bother looking up a district’s last year’s test scores to know where their achievement scores are this year. We can accurately predict those scores from data that cost nothing. It is well known and has been for many years – just Google “Karl R. White” 1982 – that a school’s average socio-economic status (SES) is an accurate predictor of its achievement test average. “Accurate” here means a correlation exceeding .80. Even though a school’s racial composition overlaps considerably with the average wealth of the families it serves, adding Race to the prediction equation will improve the prediction of test performance. Together, SES and Race tell us much about what is actually going on in the school lives of children: the years of experience of their teachers; the quality of the teaching materials and equipment; even the condition of the building they attend.

Don’t believe it? Think about this. In a recent year the free and reduced lunch rate (FRL) at the 42 largest high schools in Nebraska was correlated with the school’s average score in Reading, Math, and Science on the Nebraska State Assessments. The correlations obtained were FRL & Reading r = -.93, FRL & Science r = -.94, and FRL & Math r = -.92. Correlation coefficients don’t get larger in magnitude than 1.00.
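For readers who want to see what a correlation of that size looks like, here is a minimal sketch of how a Pearson coefficient is computed. The six schools and their numbers below are invented purely for illustration; they are not the Nebraska data, and the function name is our own. Squaring r gives the share of variance the two measures have in common, so an r of -.93 corresponds to roughly 86% (.93 × .93 ≈ .86).

```python
from math import sqrt


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two sequences of equal length, at least 2 items")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Sum of cross-products and sums of squares of deviations from the means.
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)


# HYPOTHETICAL data: invented FRL rates (%) and school average scores.
frl_rate = [10, 25, 40, 55, 70, 85]
avg_score = [92, 85, 80, 71, 66, 58]

r = pearson_r(frl_rate, avg_score)
shared_variance = r ** 2  # proportion of variance the two measures share

print(f"r = {r:.2f}, r squared = {shared_variance:.2f}")
```

With real data, the two lists would simply be each school's FRL rate and its average score on the state test.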

If you can know the schools’ test scores from their poverty rate, why give the test?

In fact, Chris Tienken answered that very question in New Jersey. With data on household income, percentage of single-parent households, and parent education level in each township, he predicted a township’s rates of scoring “proficient” on the New Jersey state assessment. In Maple Shade Township, 48.71% of the students were predicted to be proficient in Language Arts; the actual proficiency rate was 48.70%. In Mount Arlington Township, 61.4% were predicted proficient; 61.5% were actually proficient. And so it went. Demographics may not be destiny for individuals, but when you want a reliable, quick, inexpensive estimate of how a school, township, or district is doing in terms of its scores on a standardized achievement test, demographics really are destiny, until governments at many levels get serious about addressing the inequities holding back poor and minority schools!

There is one more point to consider here: a school can more easily “fake” its achievement scores than it can fake its SES and racial composition. Test scores can be artificially raised by paying a test prep company, by giving just a tiny bit more time on the test, by looking the other way as students whip out their cell phones during the test, by looking at the test beforehand and sharing some “ideas” with students about how they might do better, or by examining the tests after they are given and changing an answer or two here and there. These are not hypothetical examples; they go on all the time.

However, don’t the principals and superintendents need the test data to determine which teachers are teaching well and which ones ought to be fired? That seems logical but it doesn’t work. Our colleague Audrey Amrein-Beardsley and her students have addressed this issue in detail on the blog VAMboozled. In just one study, a Houston teacher was compared to other teachers in other schools sixteen different times over four years. Her students’ test scores indicated that she was better than the other teachers 8 times and worse than the others 8 times. So, do achievement tests tell us whether we have identified a great teacher, or a bad teacher? Or do the tests merely reveal who was in that teacher’s class that particular year? Again, the makeup of the class – demographics like social class, ethnicity, and native language – is a powerful determiner of test scores.
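One way to read that 8-and-8 split is to compare it with pure chance. The sketch below is our own illustration, not part of the Houston study: it assumes each of the sixteen comparisons is an independent fair coin flip, which is a simplification, and asks how often such flips would come out exactly 8 and 8.

```python
from math import comb


def prob_exact_heads(n_flips, n_heads):
    """Probability of exactly n_heads heads in n_flips fair coin flips."""
    return comb(n_flips, n_heads) / 2 ** n_flips


# ASSUMPTION for illustration: 16 independent comparisons, each a fair coin.
comparisons = 16
p_even_split = prob_exact_heads(comparisons, 8)

# Which split is the single most likely outcome under pure chance?
most_likely_split = max(
    range(comparisons + 1),
    key=lambda k: prob_exact_heads(comparisons, k),
)

print(f"P(8 better, 8 worse) = {p_even_split:.3f}")
print(f"most likely number of 'better' results: {most_likely_split}")
```

Under those assumptions an even split is the single most likely result of coin flipping, which is what one would expect to see if the comparisons carried little information about the teacher.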

But wait. Don’t the teachers need the state standardized test results to know how well their students are learning, what they know and what is still to be learned? Not at all. By Christmas, but certainly by springtime when most of the standardized tests are given, teachers can accurately tell you how their students will rank on those tests. Just ask them! And furthermore, they almost never get the information about their students’ achievement until the fall following the year they had those students in class, making the information value of the tests nil!

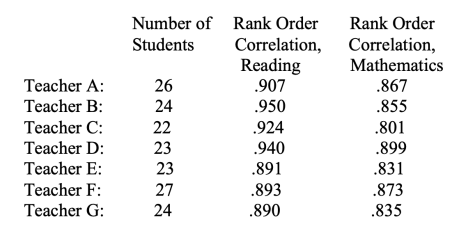

In a pilot study by our former ASU student Annapurna Ganesh, a dozen 2nd and 3rd grade teachers ranked their children in terms of their likely scores on their upcoming Arizona state tests. Correlations were uniformly high – as high in one class as +.96! In a follow up study, with a larger sample, here are the correlations found for 8 of the third-grade teachers who predicted the ranking of their students on that year’s state of Arizona standardized tests:

In this third grade sample, the lowest rank order coefficient between a teacher’s ranking of the students and the student’s ranking on the state Math or Reading test was +.72! Berliner took these results to the Arizona Department of Education, informing them that they could get the information they wanted about how children are doing in about 10 minutes and for no money! He was told that he was “lying,” and shown out of the office. The abuse must go on. Contracts must be honored.
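A rank order coefficient of this kind can be computed by hand. The sketch below uses Spearman's formula for rankings without ties; the eight students and both rankings are invented for illustration and are not Ganesh's data, and the function name is our own.

```python
def spearman_rho(rank_a, rank_b):
    """Spearman rank-order correlation for two rankings with no ties.

    Uses rho = 1 - 6 * sum(d^2) / (n * (n^2 - 1)), where d is the
    difference between the two ranks assigned to the same student.
    """
    n = len(rank_a)
    if n != len(rank_b) or n < 2:
        raise ValueError("need two rankings of equal length, at least 2 items")
    sum_d_squared = sum((a - b) ** 2 for a, b in zip(rank_a, rank_b))
    return 1 - 6 * sum_d_squared / (n * (n ** 2 - 1))


# HYPOTHETICAL class of eight students (1 = highest expected or actual score).
teacher_rank = [1, 2, 3, 4, 5, 6, 7, 8]  # teacher's prediction
test_rank = [2, 1, 3, 4, 6, 5, 7, 8]     # rank on the state test

rho = spearman_rho(teacher_rank, test_rank)
print(f"rho = {rho:.2f}")
```

In this invented class the teacher swaps two neighboring pairs of students and still gets a coefficient of about +.95, which gives a sense of how close a real teacher's ranking must be to reach the values reported above.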

Predicting rank can’t tell you the national percentile of this child or that, but that information is irrelevant to teachers anyway. Teachers usually know which child is struggling, which is soaring, and what both of them need. That is really the information that they need!

Thus far, in arguing against the desire of our federal Department of Education to reinstitute achievement testing in each state, we have neglected to mention a test’s most important characteristic: its validity. We mention here, briefly, just one type of validity, content validity. For a test to have content validity, students in each state have to be exposed to and taught the curriculum for which the test is appropriate. The US Department of Education seems not to have noticed that since March 2020 public schooling has been in a bit of an upheaval! The assumption that each district, in each state, has provided equal access to the curriculum on which a state’s test is based is fraught under normal circumstances. In a pandemic it is a remarkably stupid assumption! We assert that no state achievement test will be content valid if given in the 2020-2021 school year. Furthermore, those who help in administering and analyzing such tests are likely in violation of the testing standards of the American Psychological Association, the American Educational Research Association, and the National Council on Measurement in Education. In addition to our other concerns with state standardized tests, there is no defensible use of an invalid test. Period.

We are not opposed to all testing, just to stupid testing. The National Assessment Governing Board voted 12 to 10 in favor of administering NAEP in 2021. There is some sense to doing so. NAEP tests fewer than 1 in 1,000 students in grades 4, 8, and 12. As a valid longitudinal measure, the results could tell us the extent of the devastation of the coronavirus.

We end this essay with some good news. The DeVos Department of Education position on Spring 2021 testing is likely to be utterly irrelevant. She and assistant Blew are likely to be watching the operation of the Department of Education from the sidelines after January 21, 2021. We can only hope that members of a new administration read this and understand that some of the desperately needed money for American public schools can come from the huge federal budget for standardized testing. Because in seeking the answer to the question “Why bother testing in 2021?” we have necessarily confronted the more important question: “Why ever bother to administer these mandated tests?”

We hasten to add that we are not alone in this opinion. Among measurement experts competent to opine on such things, our colleagues at the National Education Policy Center likewise question the wisdom of federally mandated testing in 2021.

This blog post, which first appeared on the Diane Ravitch's Blog website, has been shared by permission from the author.

The views expressed by the blogger are not necessarily those of NEPC.