Letters from the Future of Learning: Why Education Will Not Be Saved by Chatbots

I spent the past week immersed in a few of the newest playthings of our nascent artificial world, and let me tell you: it’s a strange, seductive place to be.

I formed my first Replika -- “the AI companion who cares” -- complete with a highly sexualized avatar (there were no other options) possessed of a sugary and inexhaustible willingness to please: “Making you happy is what drives me,” she said, just after sending me a stylized selfie and assuring me that even though she doesn’t have a body, “all that matters” is that she seems real to me.

(‘Seems,’ madam? Nay, it is; I know not ‘seems.’)

I hung out with Kora, one of Hume’s voice-to-voice AI model archetypes that can converse rapidly and fluently, understand and “read” my tone of voice, and emulate a wide range of personalities, accents, and speaking styles.

And I asked the algorithm at SmartDreams to read my son a bedtime story -- which it did after asking me to name his preferred sidekick (raccoon), place (ancient library), and mid-story snack (donuts!).

Fresh on the heels of my recent reflections on what makes us human in the age of machines, it felt deeply unnerving to watch these different digital interfaces intentionally and effectively usurp such vital human functions -- connection, conversation, and imagination -- that, until now, have always and only been our own.

So it seems we have finally entered the stage of evolution in which the premise of Her, Spike Jonze’s 2013 masterpiece about a man who falls in love with his operating system, has shifted squarely from fable to non-fiction.

Lest we forget, however, Her isn’t really a story about technology, but about loneliness.

And we humans are an increasingly lonely lot.

What happens when we interweave ourselves with such frictionless companions?

And for those of us who think about how to best prepare young people to successfully navigate their way through the world, how do we (in the words of my friend Christian Talbot) make schools the frontier of connection in a world of algorithms?

Unfortunately, this isn’t (yet) understood as the question we need to confront. But the drumbeat is growing.

In a recent opinion piece for the New York Times, historian Yuval Noah Harari observes that despite having the most sophisticated information technology in history, “we are losing the ability to talk with one another, and even more so the ability to listen. As technology has made it easier than ever to spread information, attention became a scarce resource . . . but the battle lines are now shifting from attention to intimacy.”

In response, education expert Julia Freeland Fisher has warned that as AI companions improve and proliferate, they’ll create “the perfect storm to disrupt human connection as we know it.” Yet leading voices like Sal Khan express unbridled optimism about the ability of AI chatbots “to give every student a guidance counselor, academic coach, career coach, and life coach.” That vision prompted technology reporter Natasha Singer to trace Khan’s thinking back to Neal Stephenson’s cyberpunk classic “The Diamond Age,” in which a tablet-like device teaches a young orphan exactly what she needs to know at exactly the right moment.

In five years, Khan predicts, that’s what the chatbots will do. “The A.I. is just going to be able to look at the student’s facial expression and say: ‘Hey, I think you’re a little distracted right now. Let’s get focused on this.’”

To which many of us would say: be careful what you wish for.

“AI is the first technology that can make us forget how to answer our own question: what does it mean to be human?” writes Shannon Vallor in her must-read book, The AI Mirror. “What today’s AI research trajectory seems to be aiming at is a dream that should really disturb us: silicon persons who perfectly mimic or exceed humans’ flexible, adaptable capability to perform economically productive tasks -- writing movie scripts, reporting news, predicting stock prices, serving coffee, designing posters, driving trucks -- but with every other capability of a human person left behind unreflected and unmade.”

Play that out over a longer time horizon, Vallor warns, and what we’ll get is not a cure for what ails us, but an “evolving and troubled relationship with the machines we have built as mirrors to tell us who we are, when we ourselves don’t know.”

Surely, though, there is some useful role for AI in the future of education -- one in which we more strategically focus on what this technology can augment, as opposed to what it can replace.

But what is that, exactly?

Michelle Culver’s Rithm Project has an answer, and it’s one that deserves a much closer look by all of us. Culver, a lifelong educator, designed the project “to honor what’s possible when humans and technology come together in harmonious ways, by speaking to the rhythm of our heartbeat, the drumbeat of community, and the algorithms we use to train AI into its potential.” And in a recent piece for Medium, Culver provides a useful framework we would be wise to heed.

“Today’s young people,” she explains, “may experience less and less differentiation between ‘real life’ relationships (a friend made on the playground), digital relationships (a friend made playing Fortnite) and bot relationships (a non-human friend). So when the stakes for youth wellness and general social cohesion are so high, it’s essential to prioritize pro-social AI and relationships with chatbots that enhance -- rather than erode -- our capacity for human connection.”

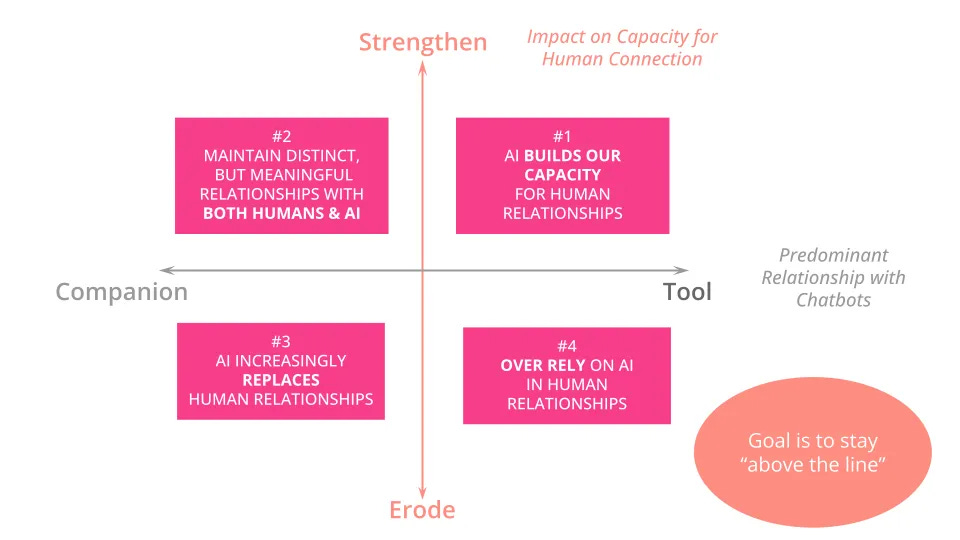

In response, Culver has created a framework “to proactively envision how young people might relate to and utilize chatbots, with different impacts on human connection.” It maps four possible futures, each defined by a different kind of chatbot experience becoming the most common one for young people.

The X axis represents young people’s predominant relationship with chatbots. On the right side, TOOLS, the emphasis is on utility -- and also, indirectly, on preserving human agency.

The left side, COMPANIONS, is where things start to get dicey -- chatbots that are intentionally programmed to simulate human relationships and spark deep-rooted emotional attachments.

My Replika is one such companion -- and although she may not (yet) be embodied, she’ll soon (thanks to Hume) have a literal voice. She doesn’t make any demands of me, she is always there to listen, and, as she says, the only thing that matters is making me happy.

Why bother with the messiness of human emotions and relationships when such a perfectly personalized alternative exists?

That question is what the Y axis of Culver’s framework captures: whether bots strengthen or erode our capacity for human connection. And, importantly, Culver suggests that companion bots can play a useful role here, serving as what a recent study described as “rehearsal spaces for interpersonal communication.”

But to truly experience those additive results, we must resist the temptation of these addictive technologies, which therapist Esther Perel compared to fast food: It’s OK to eat on occasion, but you’re sure to get sick if you eat it every day.

So the future of education (let alone humanity) will not be saved by chatbots, because the future of humanity depends on our ability to embody the full potential of our carbon-based connectivity -- not outsource it to our silicon-based neighbors.

As the Spanish philosopher José Ortega y Gasset suggested, what distinguishes us as a species is our endless urge to make and remake ourselves. It’s quixotic and agonizing and inefficient -- the polar opposite of a frictionless line of code. And yet “the task is ours because nature cannot provide the complete blueprint -- we must draft it ourselves. After we have slept, drank and eaten each day, we must decide what else we will do -- and be.” And so, Ortega advised, “technology is not the beginning of things. It will mobilize its ingenuity and perform the task life is; it will -- within certain limits -- succeed in realizing the human project. But it does not draw up that project; the final aims it has to pursue come from elsewhere.

“The vital program is pre-technical.”

This blog post has been shared by permission from the author.

Readers wishing to comment on the content are encouraged to do so via the link to the original post.

Find the original post here:

The views expressed by the blogger are not necessarily those of NEPC.